This paper is work by Marcin Copik of ETH, published at IPDPS '23.

Abstract: High performance is needed in many computing systems, from batch-managed supercomputers to general-purpose cloud platforms. However, scientific clusters lack elastic parallelism, while clouds cannot offer competitive costs for high-performance applications. In this work, we investigate how modern cloud programming paradigms can bring the elasticity needed to allocate idle resources, decreasing computation costs and improving overall data center efficiency. Function-as-a-Service (FaaS) brings the pay-as-you-go execution of stateless functions, but its performance characteristics cannot match coarse-grained cloud and cluster allocations. To make serverless computing viable for high-performance and latency-sensitive applications, we present rFaaS, an RDMA-accelerated FaaS platform. We identify critical limitations of serverless - centralized scheduling and inefficient network transport - and improve the FaaS architecture with allocation leases and microsecond invocations. We show that our remote functions add only negligible overhead on top of the fastest available networks, and we decrease the execution latency by orders of magnitude compared to contemporary FaaS systems. Furthermore, we demonstrate the performance of rFaaS by evaluating real-world FaaS benchmarks and parallel applications. Overall, our results show that new allocation policies and remote memory access help FaaS applications achieve high performance and bring serverless computing to HPC.

Entry: Zotero link, URL link, Repo

rFaaS (IPDPS '23)

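- Ideas:

  - Exploit the fine-grained, short-running nature of FaaS to improve resource utilization

  - Remove the centralized scheduler to simplify the invocation path and reduce latency

  - Accelerate data communication with RDMA

- Contents:

  - A programming framework that supports MPI, together with a FaaS runtime (open-source repo)

- Conclusion:

  - Provides a $1.88\times$ speedup over MPI

- Limitations:

  - The SPMD paradigm requires a single program with multiple entry points, so the full set of dependencies must be uploaded and copied multiple times; large dependencies incur overhead (though with good autoscaling or pre-warming this is not a major problem)

  - Inherits the drawbacks of native MPI:

    - Parallelization must be done by hand: hard to program and hard to debug

    - Lack of dynamism: it is hard to add or remove processes at runtime, which makes adaptive parallel computing difficult. (In contrast, this is supposed to be a strength of FaaS.)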

Motivation

- What’s the high level problem?

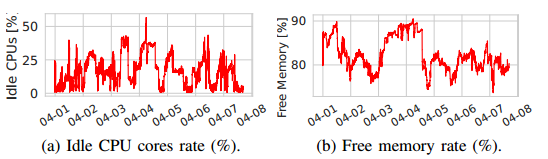

- Low Resources Utilization: In highly competitive and batch-managed supercomputers, the average utilization of nodes varies between 80% and 94%. Furthermore, on average three-quarters of the memory in HPC nodes is not utilized.

- Why is it important?

- Low resource utilization has always affected data centers and it had a vast impact on the financial efficiency of the system: wasted capital investments into idle resources and increased operating costs, as the energy usage of servers doing little and no work is more than 50% of their peak power consumption

- What is missing from previous works?

- While the elastic parallelism of FaaS has been used in compute-intensive workloads such as data analytics, video encoding, and machine learning training, it has only gained minor traction so far in high-performance and scientific computing due to a lack of low-latency communication and optimized data movement.
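To get a feel for the utilization and idle-power figures quoted in the motivation, here is a rough back-of-the-envelope sketch. It assumes a simplified two-state power model that is not from the paper: busy nodes draw peak power and idle nodes draw a fixed fraction of peak. The function name `idle_energy_share` and the value 0.5 (taken as the lower bound of "more than 50%") are illustrative assumptions.

```python
def idle_energy_share(utilization: float, idle_power_fraction: float) -> float:
    """Share of total cluster energy drawn by idle nodes.

    Simplifying assumption (not from the paper): a busy node draws peak
    power and an idle node draws idle_power_fraction * peak power.
    """
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be in [0, 1]")
    busy = utilization                      # busy nodes, in units of peak power
    idle = (1.0 - utilization) * idle_power_fraction
    total = busy + idle
    return idle / total if total > 0 else 0.0


# Node utilization range quoted in the notes: 80% to 94%.
for u in (0.80, 0.94):
    share = idle_energy_share(u, idle_power_fraction=0.5)
    print(f"utilization {u:.0%}: idle nodes draw {share:.1%} of total energy")
```

Under these assumptions, idle nodes account for roughly 11% of the energy at 80% utilization and roughly 3% at 94%. This is the kind of waste that opportunistic short-running functions aim to recover.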

Design

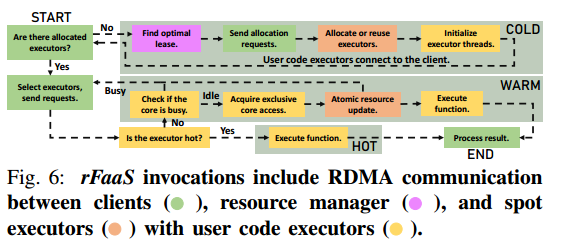

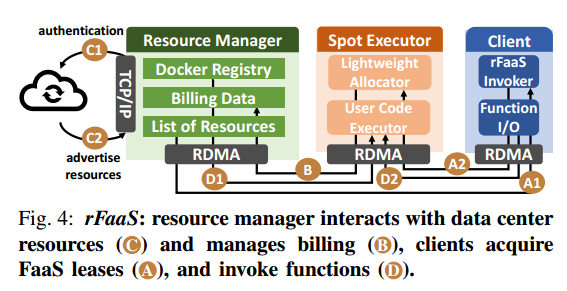

- Decentralized scheduling:

- Execution modes:

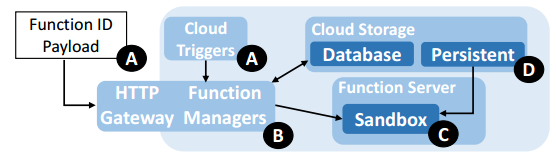

- Flow diagram:

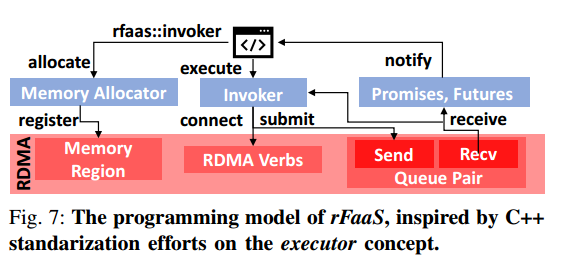

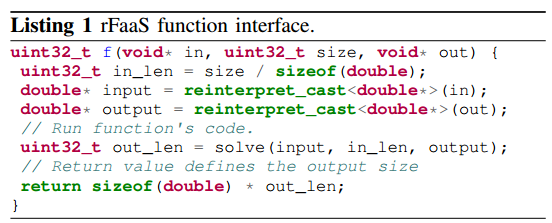

- Interface:

Clue

- Why FaaS? Since the idle nodes are available for a short time (Fig. 2a), opportunistic reuse for other computations must be constrained to short-running workloads.

- Why remove the centralized cloud proxies from invocations? The multi-step invocation path is a barrier to achieving zero-copy and fast serverless acceleration.

- Why RDMA? Connection latency and bandwidth are the fundamental bottlenecks for remote invocations, yet serverless platforms do not take advantage of modern network protocols.
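The idea of taking the central component off the invocation path can be sketched as a toy model: the client contacts a resource manager once to obtain a lease on an executor, then sends every invocation directly to that executor. This is only an illustration of the control flow. `Executor`, `Lease`, and `ResourceManager` are hypothetical names, not the rFaaS API, and a plain Python call stands in for the network transfer (no RDMA is modeled).

```python
import itertools
from dataclasses import dataclass
from typing import Any, Callable, Dict, List


class Executor:
    """Hypothetical stand-in for a node with idle cores that runs functions."""

    def __init__(self, name: str, cores: int) -> None:
        self.name = name
        self.free_cores = cores
        self.functions: Dict[str, Callable[[Any], Any]] = {}

    def register(self, fn_name: str, fn: Callable[[Any], Any]) -> None:
        self.functions[fn_name] = fn

    def invoke(self, fn_name: str, payload: Any) -> Any:
        # Direct client-to-executor call; no manager or proxy in between.
        return self.functions[fn_name](payload)


@dataclass
class Lease:
    lease_id: int
    executor: Executor
    cores: int


class ResourceManager:
    """Hands out leases; contacted for allocation, not per invocation."""

    def __init__(self, executors: List[Executor]) -> None:
        self.executors = executors
        self.requests = 0  # number of times a client contacted the manager
        self._ids = itertools.count(1)

    def acquire(self, cores: int) -> Lease:
        self.requests += 1
        for executor in self.executors:
            if executor.free_cores >= cores:
                executor.free_cores -= cores
                return Lease(next(self._ids), executor, cores)
        raise RuntimeError("no executor has enough idle cores")

    def release(self, lease: Lease) -> None:
        self.requests += 1
        lease.executor.free_cores += lease.cores


manager = ResourceManager([Executor("node-0", 2), Executor("node-1", 4)])
lease = manager.acquire(cores=4)  # one allocation request
lease.executor.register("square", lambda x: x * x)
results = [lease.executor.invoke("square", i) for i in range(5)]
manager.release(lease)  # one release request
print(results, manager.requests)
```

In this toy run, five invocations complete while the manager is contacted only twice (acquire and release), which is the property the lease-based design is after: allocation cost is paid once and amortized over many invocations.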

Last modified on 2023-10-06